To download the COURSERA.ORG courses you are subscribed to, you can either write your own bot, which must solve the authentication challenge and then crawl, identify, and fetch all the relevant course files, or learn to use COURSERA-DL, a free and open source software (FOSS) project written mostly in Python, available from:

https://github.com/coursera-dl/coursera-dl/

The first option is great for learning the corresponding skills, but it is hard work.

The second option is available immediately and is much more sensible if you want quick results, especially if your only goal is getting the course materials for offline study.

This post is about installing and using COURSERA-DL. It assumes Python is properly installed. The commands shown were tested on a Python installation on Windows 10.

To install or update COURSERA-DL, the following sequence of commands will work. Enter them in any command-line console (CMD.EXE on Windows). If COURSERA-DL is already installed, it keeps its configuration and is simply updated. The commands go a bit beyond COURSERA-DL, because I also care about EDX courses.

A project similar to COURSERA-DL is EDX-DL, for courses at EDX.ORG. Both learning sites host materials on YOUTUBE.COM, so yet another related FOSS tool is YOUTUBE-DL.

python -m pip install --upgrade pip

pip install --upgrade coursera-dl

pip install --upgrade edx-dl

pip install --upgrade youtube-dl

Once these FOSS tools are installed on the system, they can be called from the command line.
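To confirm that the three tools really are reachable from the command line, a small Python check can help. This is only a sketch: the helper name `missing_tools` is my own, and it assumes that pip placed the launchers in a folder that is on the PATH.

```python
import shutil

# Command names installed by the pip commands above.
TOOLS = ("coursera-dl", "edx-dl", "youtube-dl")


def missing_tools(names=TOOLS, which=shutil.which):
    """Return the command names that cannot be found on the PATH.

    `which` is injectable so the function can be exercised without
    touching the real system; by default it is shutil.which.
    """
    return [name for name in names if which(name) is None]
```

Calling `missing_tools()` with no arguments returns an empty list when all three commands can be found. Any name it does return usually means the Python scripts folder is not on the PATH.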

To find the technical name of a COURSERA.ORG course, look at its URL while you are taking the course in a browser session. For example, when starting the Coursera course named “Build a Modern Computer From First Principles”, the URL is

https://www.coursera.org/learn/build-a-computer/home/welcome

The technical name is “build-a-computer”, i.e., the string after “https://www.coursera.org/learn/” and before the next forward slash (“/”). This parsing rule should work for any course.
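The parsing rule just described can be sketched in a few lines of Python. The function name `course_slug` is hypothetical, and the sketch only covers URLs of the “/learn/” form shown above.

```python
from urllib.parse import urlparse


def course_slug(url):
    """Return the path segment that follows /learn/ in a course URL."""
    # Split the URL path into its non-empty segments.
    parts = [p for p in urlparse(url).path.split("/") if p]
    if len(parts) < 2 or parts[0] != "learn":
        raise ValueError(f"not a /learn/ course URL: {url}")
    return parts[1]


print(course_slug("https://www.coursera.org/learn/build-a-computer/home/welcome"))
# prints: build-a-computer
```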

To download a COURSERA.ORG course named “XPTO”, logging in as “user@email.com” with password “1234”, in theory it should suffice to open a command-line window (CMD.EXE on Windows) and enter:

coursera-dl -u "user@email.com" -p "1234" "XPTO"

These days, this will probably FAIL, because of the CAPTCHAs that were introduced, which defeat many bots.

As of February 2021, COURSERA-DL does NOT defeat the COURSERA CAPTCHA, which asks you to pick the images that solve some challenge. Defeating CAPTCHAs can be quite a project on its own, so this is understandable. The workaround is easy, but not automatable.

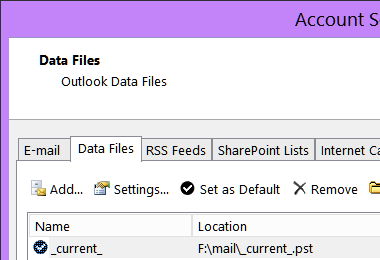

For each COURSERA.ORG course you are subscribed to, when you use a web browser to learn it, a cookie named “CAUTH” for domain “.coursera.org” is created on the local computer. In my case, I always use Firefox with the “cookie quick manager” extension to see the cookies for a domain. Using that extension, or an equivalent, find the CAUTH cookie, select its value, and copy it; the value can be a long string (hundreds of characters).

Then, provide the value of that string upon calling COURSERA-DL:

coursera-dl -u "user@email.com" -p "1234" "XPTO" -ca "hundreds of chars go here"

That is it.
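If you prefer to launch the download from a Python script rather than typing the command, the same call can be assembled as an argument list. This is a minimal sketch: the helper names `build_command` and `download` are my own, and only the `-u`, `-p`, and `-ca` options shown above are used.

```python
import subprocess


def build_command(user, password, course, cauth=None):
    """Assemble the coursera-dl argument list used in this post."""
    cmd = ["coursera-dl", "-u", user, "-p", password, course]
    if cauth:
        # Append the CAUTH cookie value copied from the browser.
        cmd += ["-ca", cauth]
    return cmd


def download(user, password, course, cauth=None):
    """Run coursera-dl and return its exit code (0 normally means success)."""
    return subprocess.run(build_command(user, password, course, cauth)).returncode
```

Passing a list to `subprocess.run` avoids quoting problems, which matters because the CAUTH value is long and may contain characters the shell treats specially.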

For a better workflow, find the folder that holds the Python script for coursera-dl, i.e., search for the local file “coursera-dl.py”.

If you have Python installed at

c:\python

the file will be at

c:\python\scripts

In the scripts folder, create a NEW text file named “coursera.conf”, containing the sensitive data and any other arguments you want, which you can learn about by reading COURSERA-DL’s documentation.

For example:

-u "user@email.com" -p "1234" --subtitle-language en --download-quizzes

The text above is the content of the text file “coursera.conf”, saved in the same folder that contains the coursera-dl.py script.

Now, to download course “XPTO”, just do:

coursera-dl "XPTO" -ca "hundreds of chars go here"